Introduction

At D2SI, we have been using Docker since its very beginning and have been helping many projects go into production. We believe that going into production requires a strong understanding of the technology to be able to debug complex issues, analyze unexpected behaviors or troubleshoot performance degradations. That is why we have tried to understand as best as we can the technical components used by Docker.

This blog post is focused on the Docker network overlays. The Docker network overlay driver relies on several technologies: network namespaces, VXLAN, Netlink and a distributed key-value store. This article will present each of these mechanisms one by one along with their userland tools and show hands-on how they interact together when setting up an overlay to connect containers.

This post is derived from the presentation I gave at DockerCon 2017 in Austin. The slides are available here.

All the code used in this post is available on GitHub.

Docker Overlay Networks

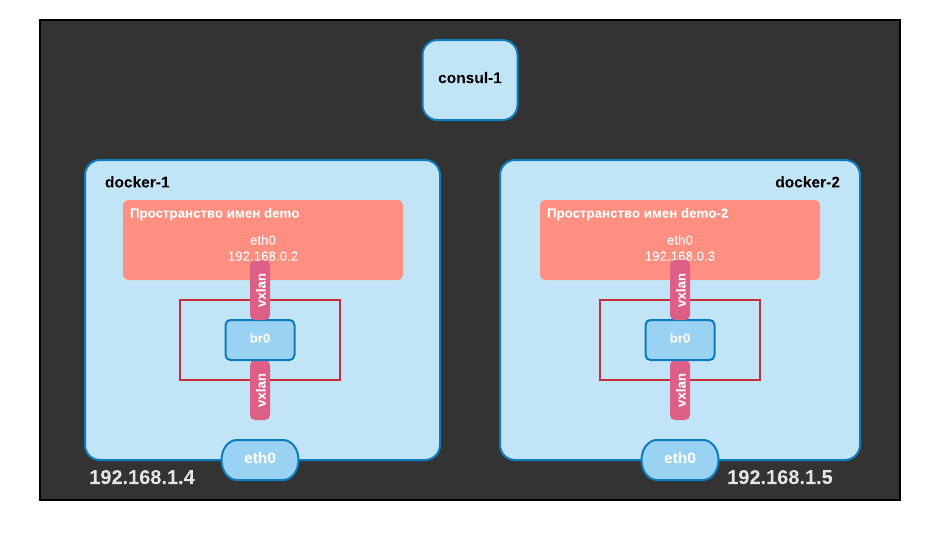

First, we are going to build an overlay network between Docker hosts. In our example, we will do this with three hosts: two running Docker and one running Consul. Docker will use Consul to store the overlay network metadata that needs to be shared by all the Docker engines: container IPs, MAC addresses and location. Before Docker 1.12, Docker required an external Key-Value store (Etcd or Consul) to create overlay networks and Docker Swarms (now often referred to as “classic Swarm”). Starting with Docker 1.12, Docker can rely on an internal Key-Value store to create Swarms and overlay networks (“Swarm mode” or “new swarm”). We chose to use Consul because it allows us to look into the keys stored by Docker and better understand the role of the Key-Value store. We are running Consul on a single node, but in a real environment we would need a cluster of at least three nodes for resiliency.

In our example, the servers will have the following IP addresses:

- consul: 10.0.0.5

- docker0: 10.0.0.10

- docker1: 10.0.0.11

Starting the Consul and Docker services

The first thing we need to do is to start a Consul server. To do this, we simply download Consul from here. We can then start a very minimal Consul service with the following command:
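A minimal sketch of that command, built only from the four flags described just below. The `start_consul` wrapper and the `CONSUL` variable are illustrative names, not part of Consul; the variable is there so the command line can be printed with `CONSUL=echo` instead of starting an agent.

```shell
# Binary to run. Override with CONSUL=echo for a dry run (illustrative convention).
CONSUL=${CONSUL:-consul}

# Start a standalone, non-persistent Consul server with the web UI,
# accepting client requests on all network interfaces.
start_consul() {
  "$CONSUL" agent -server -dev -ui -client 0.0.0.0
}
```

Calling `start_consul` on the consul host runs the agent in the foreground.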

We use the following flags:

- server: start the consul agent in server mode

- dev: create a standalone Consul server without any persistence

- ui: start a small web interface allowing us to easily look at the keys stored by Docker and their values

- client 0.0.0.0: bind to all network interfaces for client access (default is 127.0.0.1 only)

To configure the Docker engines to use Consul as a Key-Value store, we start the daemons with the cluster-store option:

The cluster-advertise option specifies which IP to advertise in the cluster for a Docker host (this option is mandatory). This command assumes that the host name consul resolves to the address of the Consul server, 10.0.0.5 in our case.
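As a sketch, the daemon invocation might look like the following. The `consul://consul:8500` URL assumes Consul's default HTTP port, and `eth0:2376` is an assumed interface and port for `--cluster-advertise`; adjust both to your hosts. The `start_dockerd` wrapper and `DOCKERD` variable are illustrative. Both flags belong to the legacy external Key-Value store setup and were deprecated and later removed from Docker Engine, so this only applies to engines of that era.

```shell
# Binary to run. Override with DOCKERD=echo for a dry run (illustrative convention).
DOCKERD=${DOCKERD:-dockerd}

# Start the Docker daemon with Consul as its external key-value store.
# eth0:2376 is an assumption: the interface whose address is advertised to the cluster.
start_dockerd() {
  "$DOCKERD" \
    --cluster-store=consul://consul:8500 \
    --cluster-advertise=eth0:2376
}
```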

If we look at the Consul UI, we can see that Docker created some keys, but the network key (http://consul:8500/v1/kv/docker/network/v1.0/network/) is still empty.
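The same check can be scripted against Consul's HTTP API instead of the UI. This sketch assumes `curl` is installed and that the host name `consul` resolves as described above; `network_keys_url` and `list_network_keys` are illustrative helper names. The `?keys` query parameter asks Consul to return only the key names stored under the prefix.

```shell
# Build the URL of the prefix under which Docker stores overlay networks.
# $1 is the Consul host (defaults to "consul").
network_keys_url() {
  printf 'http://%s:8500/v1/kv/docker/network/v1.0/network/?keys\n' "${1:-consul}"
}

# Ask Consul for the key names under that prefix.
list_network_keys() {
  curl -s "$(network_keys_url "$1")"
}
```

Running `list_network_keys` before and after creating an overlay network is one way to watch Docker populate the store.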

You can easily create the same environment in AWS using the terraform setup in the GitHub repository. All the default configuration (in particular the region to use) is in variables.tf. You will need to give a value to the key_pair variable, either on the command line (terraform apply -var key_pair=demo) or by modifying the variables.tf file. The three instances are configured with userdata: Consul and Docker are installed and started with the right options, and an entry is added to /etc/hosts so that consul resolves to the IP address of the Consul server. When connecting to the Consul or Docker servers, you should use the public IP addresses (given in the terraform outputs) and connect as user “admin” (the terraform setup uses a Debian AMI).

Creating an Overlay

We can now create an overlay network between our two Docker nodes:

We are using the overlay driver, and are choosing 192.168.0.0/24 as a subnet for the overlay (this parameter is optional but we want to have addresses very different from the ones on the hosts to simplify the analysis).
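A sketch of the corresponding command, using the demonet name under which the network appears in the rest of this post. The `create_demonet` wrapper and the `DOCKER` variable are illustrative; setting `DOCKER=echo` prints the command instead of running it.

```shell
# Binary to run. Override with DOCKER=echo for a dry run (illustrative convention).
DOCKER=${DOCKER:-docker}

# Create an overlay network named demonet with an explicit subnet.
create_demonet() {
  "$DOCKER" network create --driver overlay --subnet 192.168.0.0/24 demonet
}
```

The network only needs to be created on one host; the other engine learns about it through the shared Key-Value store.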

Let’s check that we configured our overlay correctly by listing networks on both hosts.

This looks good: both Docker nodes know the demonet network and it has the same id (13fb802253b6) on both hosts.

Let’s now check that our overlay works by creating a container on docker0 and trying to ping it from docker1. On docker0, we create a C0 container, attach it to our overlay, explicitly give it an IP address (192.168.0.100) and make it sleep. On docker1 we create a container attached to the overlay network and running a ping command targeting C0.
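These two steps could be sketched as follows. The `alpine` image is an assumption (any image that ships `sleep` and `ping` would do), as are the helper names and the one-hour sleep.

```shell
# Binary to run. Override with DOCKER=echo for a dry run (illustrative convention).
DOCKER=${DOCKER:-docker}
# Assumed image: any image providing sleep and ping works.
IMAGE=${IMAGE:-alpine}

# On docker0: start C0 on the overlay with a fixed address and keep it alive.
run_c0() {
  "$DOCKER" run -d --name C0 --net demonet --ip 192.168.0.100 "$IMAGE" sleep 3600
}

# On docker1: start a throwaway container on the overlay and ping C0 three times.
ping_c0() {
  "$DOCKER" run --rm --net demonet "$IMAGE" ping -c 3 192.168.0.100
}
```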

We can see that the connectivity between both containers is OK. If we try to ping C0 directly from the docker1 host (rather than from a container), it does not work, because docker1 does not know anything about 192.168.0.0/24, which is isolated in the overlay.

Here is what we have built so far:

Under the hood

Now that we have built an overlay let’s try and see what makes it work.

Network configuration of the containers

What is the network configuration of C0 on docker0? We can exec into the container to find out:
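One way to do this, sketched under the assumption that the container image ships the `ip` tool. The `iface_addrs` helper is illustrative: it reduces the one-line output of `ip -o -4 addr show` to interface name and address pairs.

```shell
# Read `ip -o -4 addr show` output on stdin and print "<interface> <address/prefix>".
# In that format, field 2 is the interface name and field 4 is the IPv4 address.
iface_addrs() {
  awk '{ print $2, $4 }'
}

# Usage on docker0 (needs a running C0 container):
#   docker exec C0 ip -o -4 addr show | iface_addrs
```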

We have two interfaces (and the loopback) in the container:

- eth0: configured with an IP in the 192.168.0.0/24 range. This interface is the one in our overlay.

- eth1: configured with an IP in the 172.18.0.0/16 range (172.18.0.2), which we did not configure anywhere

What about the routing configuration?
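A small sketch for answering that question from a script: the illustrative `default_route_dev` helper reads `ip route show` output and prints the device carrying the default route. It assumes the usual iproute2 line format (`default via <gateway> dev <interface>`).

```shell
# Read `ip route show` output on stdin and print the interface of the default route.
default_route_dev() {
  awk '$1 == "default" {
    # Find the "dev" keyword and print the word that follows it.
    for (i = 2; i < NF; i++) if ($i == "dev") { print $(i + 1); exit }
  }'
}

# Usage on docker0 (needs a running C0 container):
#   docker exec C0 ip route show | default_route_dev
```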

The routing configuration indicates that the default route is via eth1, which means that this interface can be used to access resources outside of the overlay. We can verify this easily by pinging an external IP address.

Note that it is possible to create an overlay where containers do not have access to external networks using the --internal flag.

Let’s see if we can get more information on these interfaces:

The type of both interfaces is veth. veth interfaces always come in pairs connected by a virtual wire. The two peered veth interfaces can be in different network namespaces, which allows traffic to move from one namespace to another. These two veth interfaces are used to get outside of the container network namespace.

Here is what we have found out so far:

We now need to identify the interfaces peered with each veth.

What is the container connected to?

We can identify the other end of a veth using the ethtool command. However, this command is not available in our container. We can still run it against the container's network namespace, either with “nsenter”, which enters one or several namespaces associated with a process, or with “ip netns exec”, which relies on iproute to execute a command in a given network namespace. Docker does not create symlinks in the /var/run/netns directory, which is where ip netns looks for network namespaces. This is why we will rely on nsenter for namespaces created by Docker.

To list the network namespaces created by Docker we can simply run:
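A sketch, assuming Docker keeps its namespace files under /var/run/docker/netns (the directory used throughout this post). The `list_docker_netns` helper is illustrative and takes an optional directory argument.

```shell
# List the network namespace files created by Docker, one per line.
# $1 is the directory to list (defaults to Docker's netns directory).
list_docker_netns() {
  ls -1 "${1:-/var/run/docker/netns}"
}
```

On a real host this directory is usually only readable by root, so the equivalent one-liner is `sudo ls -1 /var/run/docker/netns`.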

To use this information, we need to identify the network namespace of containers. We can achieve this by inspecting them, and extracting what we need from the SandboxKey:
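A sketch of that extraction using a Go template with `docker inspect`. The `container_netns` helper and the `DOCKER` variable are illustrative names.

```shell
# Binary to run. Override with DOCKER=echo for a dry run (illustrative convention).
DOCKER=${DOCKER:-docker}

# Print the path of the network namespace file of container $1.
container_netns() {
  "$DOCKER" inspect -f '{{.NetworkSettings.SandboxKey}}' "$1"
}
```

For C0, the result is a path under /var/run/docker/netns that can be handed to nsenter.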

We can also execute host commands inside the network namespace of a container (even if this container does not have the command):
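A sketch with nsenter, whose `--net=<file>` option enters the network namespace referenced by a file. The `in_netns` wrapper and the `NSENTER` variable are illustrative; the namespace path is passed as the first argument and the command to run follows it.

```shell
# Binary to run. Override with NSENTER=echo for a dry run (illustrative convention).
NSENTER=${NSENTER:-nsenter}

# Run a host command inside the network namespace file given as $1.
in_netns() {
  ns_path="$1"
  shift
  "$NSENTER" --net="$ns_path" "$@"
}

# Usage on docker0 (needs root), where <path> is the SandboxKey of C0:
#   sudo nsenter --net=<path> ip addr show
```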

Let’s see which interface indexes are associated with the peers of eth0 and eth1:
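For a veth interface, `ethtool -S` reports a `peer_ifindex` statistic. The illustrative `peer_ifindex` helper below pulls that number out of the output, which makes the lookup easy to script.

```shell
# Read `ethtool -S <veth>` output on stdin and print the peer interface index.
peer_ifindex() {
  awk '$1 == "peer_ifindex:" { print $2 }'
}

# Usage on docker0 (needs root), where <path> is the SandboxKey of C0:
#   sudo nsenter --net=<path> ethtool -S eth0 | peer_ifindex
```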

We are now looking for interfaces with indexes 7 and 10. We can first look on the host itself:
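A sketch of that lookup on the host, based on the one-line output of `ip -o link show`. The `ifname_by_index` helper is illustrative, and the interface name in the usage comment is made up.

```shell
# Read `ip -o link show` output on stdin and print the name of the interface
# whose index is $1, without the "@ifN" peer suffix. Prints nothing if absent.
ifname_by_index() {
  awk -F': ' -v idx="$1" '$1 == idx { sub(/@.*/, "", $2); print $2 }'
}

# Usage on docker0:
#   ip -o link show | ifname_by_index 10    # e.g. vethfa9b5c1 (hypothetical name)
#   ip -o link show | ifname_by_index 7     # prints nothing: not in this namespace
```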

We can see from this output that there is no trace of interface 7, but we have found interface 10, the peer of eth1. In addition, this interface is attached to a bridge called “docker_gwbridge”. What is this bridge? If we list the networks managed by Docker, we can see that it has appeared in the list:

We can now inspect it:
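A sketch that prints only the driver options of the bridge, using a Go template to skip the rest of the inspect output. The `gwbridge_options` helper and the `DOCKER` variable are illustrative names.

```shell
# Binary to run. Override with DOCKER=echo for a dry run (illustrative convention).
DOCKER=${DOCKER:-docker}

# Print the driver options of docker_gwbridge as JSON.
gwbridge_options() {
  "$DOCKER" network inspect -f '{{json .Options}}' docker_gwbridge
}
```

Dropping the `-f` template prints the full description, including the subnet and the attached containers.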

I removed part of the output to focus on the essential pieces of information:

- this network uses the driver bridge (the same one used by the standard docker bridge, docker0)

- it uses subnet 172.18.0.0/16, which is consistent with eth1

- enable_icc is set to false which means we cannot use this bridge for inter-container communication

- enable_ip_masquerade is set to true, which means the traffic from the container will be NATed to access external networks (which we saw earlier when we successfully pinged 8.8.8.8)

We can verify that inter-container communication is disabled by trying to ping C0 on its eth1 address (172.18.0.2) from another container on docker0 also attached to demonet:

Here is an updated view of what we have found:

What about eth0, the interface connected to the overlay?

The interface peered with eth0 is not in the host network namespace. It must be in another one. If we look again at the network namespaces:

We can see a namespace called “1-13fb802253”. Except for the “1-” prefix, the name of this namespace is the beginning of the network id of our overlay network:

This namespace is clearly related to our overlay network. We can look at the interfaces present in that namespace:
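A sketch of how to reach that namespace from the network id. The naming rule encoded in `overlay_netns_name` ("1-" followed by the first ten characters of the id) is an assumption generalized from the single example above, not a documented Docker guarantee.

```shell
# Derive the overlay namespace name from a network id (assumed rule:
# the "1-" prefix followed by the first 10 characters of the id).
overlay_netns_name() {
  printf '1-%s\n' "$(printf '%s' "$1" | cut -c1-10)"
}

# Usage on docker0 (needs root):
#   sudo nsenter --net="/var/run/docker/netns/$(overlay_netns_name 13fb802253b6)" ip -d link show
```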

The overlay network namespace contains three interfaces (and lo):

- br0: a bridge

- veth2: a veth interface which is the peer interface of eth0 in our container and which is connected to the bridge

- vxlan0: an interface of type “vxlan” which is also connected to the bridge

The vxlan interface is clearly where the “overlay magic” is happening, and we are going to look at it in detail, but let’s update our diagram first:

Conclusion

This concludes part 1 of this article. In part 2, we will focus on VXLAN: what this protocol is and how Docker uses it.
